Lei Feng Network note: This article comes from Tencent Youtu and is republished by Lei Feng Network with authorization. It introduces the three major parts of a face recognition system and analyzes in depth why mobile phone cameras can beautify faces automatically.

This is an era that "judges by the face." When it comes to face technology, the one we know best is face recognition. It is active in finance, social security, education, security, and other fields, and has become a star of the AI technology field. Previously, on the Youtu WeChat official account, we focused on Youtu's face recognition. This article mainly introduces some of the technologies that silently support face recognition. For more on face recognition itself, see "Application of Deep Learning in Face Recognition - Evolution of Grandmother Model".

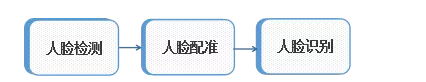

In general, a complete face recognition system consists of three major components: face detection, face registration (face alignment), and face recognition. They run as a pipeline. Face detection finds the position of the face in the image; face registration then locates the eyes, nose, mouth, and other facial organs on that face; finally, face recognition extracts features from the two faces being compared, computes their similarity, and confirms the identity.
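The three-stage pipeline can be sketched as follows. This is a minimal illustration, not Youtu's implementation: `detect`, `align`, and `extract` are hypothetical callables standing in for real models, cosine similarity is assumed as the comparison measure, and the `threshold` of 0.5 is a hypothetical value that would normally be tuned on validation data.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify(img_a, img_b, detect, align, extract, threshold=0.5):
    """Detection -> registration -> feature extraction on both images, then compare.

    detect, align and extract are hypothetical stand-ins for real models;
    threshold is a hypothetical decision value.
    """
    feats = []
    for img in (img_a, img_b):
        box = detect(img)                      # face detection: where is the face?
        landmarks = align(img, box)            # face registration: where are eyes, nose, mouth?
        feats.append(extract(img, landmarks))  # face recognition: identity feature vector
    score = cosine_similarity(feats[0], feats[1])
    return score, score >= threshold
```

Keeping the stages as separate callables mirrors the pipeline in Figure 1: each stage can be swapped or upgraded without touching the others.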

Figure 1 Face Recognition Process

1. Introduction to face registration

Face registration (face alignment) is also known as face feature point detection and localization. Face feature points differ from image feature points in the usual sense, such as corners or SIFT feature points: they are usually a set of manually defined points (see Figure 2). Different application scenarios use different numbers of feature points, such as 5, 68, or 82.
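Because the point sets are manually defined, converting between them is a matter of index conventions. The sketch below derives a 5-point set (two eye centers, nose tip, two mouth corners) from a 68-point set. It assumes the widely used 0-indexed iBUG 300-W 68-point layout; the article does not say which layout Youtu uses, so the index constants are assumptions.

```python
import numpy as np

# Assumed 0-indexed iBUG 300-W 68-point convention (not confirmed by the article):
# 36-41 and 42-47 outline the two eyes, 30 is the nose tip, 48 and 54 are mouth corners.
EYE_A = list(range(36, 42))
EYE_B = list(range(42, 48))
NOSE_TIP = 30
MOUTH_CORNERS = (48, 54)

def to_five_points(pts68):
    """Reduce a (68, 2) array of (x, y) landmarks to a (5, 2) array."""
    pts68 = np.asarray(pts68, dtype=float)
    if pts68.shape != (68, 2):
        raise ValueError("expected a (68, 2) array of (x, y) coordinates")
    return np.stack([
        pts68[EYE_A].mean(axis=0),   # center of the first eye
        pts68[EYE_B].mean(axis=0),   # center of the second eye
        pts68[NOSE_TIP],
        pts68[MOUTH_CORNERS[0]],
        pts68[MOUTH_CORNERS[1]],
    ])
```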

Figure 2 Commonly used feature point layouts in face feature point detection and localization

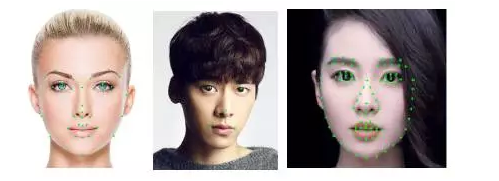

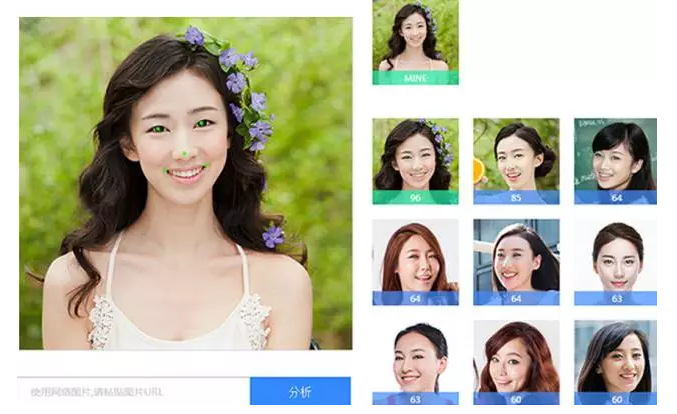

Besides playing a key role in face recognition systems, face registration is widely used in 3D face modeling, face animation, facial expression analysis, facial beautification and virtual makeup, and selfie special effects. A brief plug: Youtu's face tracking and registration technology performs excellently. Single-frame processing on mainstream mobile phones takes less than 3 ms, and it is already used in scenarios such as "Tiantian P Tu - dynamic selfie", "Mobile QQ - short video", "Mobile QQ - video chat", Mobile Qzone, and the action camera.

Figure 3 Face beautification and virtual makeup

2. Research status of face registration

Traditional face registration research

As with other face technologies, changes in lighting, head pose, and expression, as well as occlusion, greatly affect the accuracy of face registration. Face registration also has its own characteristics, however. First, the feature points describe the structure of the face (contour and facial features); this structure is complete and stable, and the relative positions of the facial features are fixed. Second, changes in head pose, expression, and the like cause the positions of the feature points to change significantly. Traditional face registration research therefore tries to find more accurate feature descriptions that capture this combination of fixed structure and changing positions, and then selects an optimization method suited to those descriptors to locate the face feature points.

The most direct feature descriptors are color and grayscale, for example using skin color to detect and locate parts of the face. Slightly more complex are various texture descriptors, as in face detection based on Haar-like texture features and cascade classifiers trained with AdaBoost. These descriptors ignore the positional relationships between feature points and therefore cannot express a plausible face structure. Active Shape Models (ASM) and Active Appearance Models (AAM) can express texture and shape features simultaneously.
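Haar-like features are differences between sums of pixels in adjacent rectangles, and an integral image lets any rectangle sum be computed with four lookups. The sketch below shows only a single two-rectangle feature; the AdaBoost training that selects and weights thousands of such features into a cascade is not shown, and the function names are illustrative.

```python
import numpy as np

def integral_image(img):
    """ii[y, x] = sum of img[:y, :x]; a zero row/column is padded at the top/left."""
    img = np.asarray(img, dtype=np.int64)
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of img[y:y+h, x:x+w] in O(1) using four integral-image lookups."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])

def two_rect_vertical(ii, x, y, w, h):
    """Top half minus bottom half, e.g. a dark eye band above brighter cheeks."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)
```

Because each feature costs a constant number of operations regardless of its size, a cascade can evaluate many features per window cheaply, which is what made this family of detectors practical.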

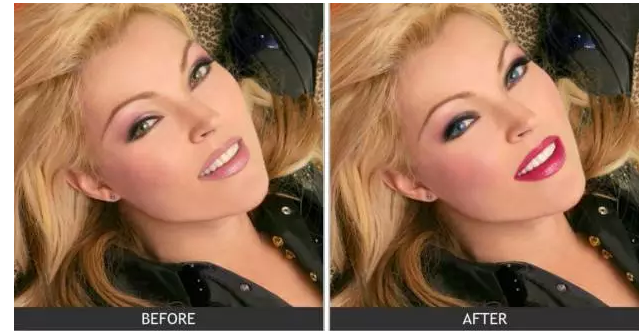

Both models express shape with the Point Distribution Model (PDM). Figure 4 shows the statistical distribution of face feature points over 600 face images; the red dots mark the mean position of each feature point. ASM represents the texture of each feature point separately: it computes texture information in a neighborhood around each point to produce a response map for that point. In Figure 5, the area circled in blue is used to compute the response map, and the red dot marks the true position of the face feature point. AAM instead describes texture using the whole face. It warps the face so that its feature points move to a standard shape, which yields a shape-free face texture, and then models that texture with principal component analysis.
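A PDM is typically built by applying PCA to aligned training shapes, so that any plausible shape is approximated as x ≈ x̄ + P b, where x̄ is the mean shape (the red dots in Figure 4), the columns of P are the main modes of variation, and b holds the shape parameters. The minimal sketch below assumes the shapes are already aligned (for example by Procrustes analysis); the 95% variance cutoff and the ±3 standard deviation limit on b are common illustrative choices, not values from the article.

```python
import numpy as np

def fit_pdm(shapes, var_keep=0.95):
    """PCA point distribution model from aligned shapes of shape (M, N, 2).

    Returns the mean shape vector (2N,), mode matrix P (2N, k), and mode variances (k,).
    """
    X = np.asarray(shapes, dtype=float).reshape(len(shapes), -1)   # (M, 2N)
    mean = X.mean(axis=0)
    _, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
    var = s ** 2 / (len(X) - 1)                                    # variance per mode
    k = int(np.searchsorted(np.cumsum(var) / var.sum(), var_keep) + 1)
    return mean, Vt[:k].T, var[:k]

def project(mean, P, shape):
    """Shape parameters b for a given (N, 2) shape."""
    return P.T @ (np.asarray(shape, dtype=float).ravel() - mean)

def constrain(b, var, n_std=3.0):
    """Clip b so the synthesized shape stays statistically plausible."""
    limit = n_std * np.sqrt(var)
    return np.clip(b, -limit, limit)

def synthesize(mean, P, b):
    """Reconstruct an (N, 2) shape from parameters b: x = mean + P b."""
    return (mean + P @ b).reshape(-1, 2)
```

In ASM, the search alternates between moving each point toward the best match in its response map and projecting the result back through `project`, `constrain`, and `synthesize`, which is how the shape model keeps the points in a plausible face configuration.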

Deep face registration research

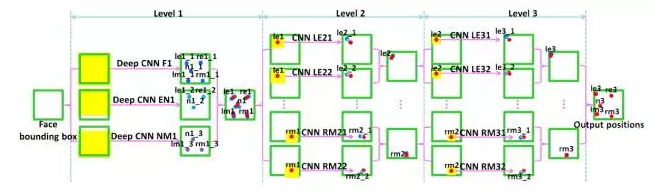

Since 2006, deep neural networks have achieved unprecedented success in computer vision, speech recognition, natural language processing, and many other fields, and they have brought a breath of spring to face registration research as well. Researchers no longer need to hand-craft complex face descriptors. At present, two types of deep face registration methods are widely recognized in academia and industry: cascaded convolutional neural network face registration (Cascade CNN) and multi-task deep face registration.

As shown in Figure 6, Cascade CNN consists of three levels, each containing multiple convolutional networks. The first level produces an initial estimate of the point positions, and the next two levels fine-tune the feature point positions on that basis. Multi-task registration trains face registration jointly with other related face attributes. Attributes related to facial feature points include head pose, expression, and so on; for example, the mouth of a smiling face is likely to be open, and facial features are distributed symmetrically. Multi-task learning helps improve the accuracy of feature point detection and localization. However, different tasks converge at different speeds and differ in difficulty, which makes training harder. Academia currently offers two ways to regulate the training of different tasks: a task-wise early stopping criterion and a dynamic parameter control mechanism.
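The idea of task-wise early stopping can be sketched as a weighted multi-task loss in which each auxiliary task (pose, expression, and so on) is switched off once its validation loss stops improving, while the main landmark task always stays on. This is a deliberately simplified, patience-based stand-in; the published criterion is more elaborate, and the task names, weights, and `patience` value here are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AuxTask:
    """An auxiliary task with a simplified patience-based early-stopping rule."""
    name: str
    weight: float
    patience: int = 5
    best: float = float("inf")
    bad_epochs: int = 0
    active: bool = True

    def update(self, val_loss):
        """Call once per epoch with this task's validation loss."""
        if not self.active:
            return
        if val_loss < self.best - 1e-6:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.active = False   # stop this task; it no longer helps

def total_loss(landmark_loss, aux_losses, tasks):
    """Main landmark loss plus weighted losses of the still-active auxiliary tasks."""
    loss = landmark_loss
    for task in tasks:
        if task.active:
            loss += task.weight * aux_losses[task.name]
    return loss
```

Dropping a converged or overfitting auxiliary task keeps it from dominating the shared features after it has stopped helping the main landmark task.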

Figure 6 Cascade CNN network model

3. Youtu face registration

Face registration in different application scenarios

Academic research on face registration advances rapidly, and industry's requirements for the technology in products keep rising. Different scenarios place different demands on face registration.

The core problem for face recognition is aligning high-level semantics across the pixels of face images, that is, locating the key face feature points. Incorrect feature point positions can severely distort the extracted face descriptors, which in turn degrades recognition performance. To better support face recognition, we enlarged the range of face-box variation the registration handles, reducing its dependence on the size of the face detection box. We chose five face feature points, which keeps enough ability to describe face structure while reducing the impact of registration errors on face recognition.
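A common way to use five registered points in recognition is to estimate a similarity transform (scale, rotation, translation) that maps them onto a fixed template and then warp the face into a canonical crop. The sketch below uses Umeyama's least-squares method; the article does not say how Youtu normalizes faces, and `TEMPLATE_5PT` is a hypothetical template for a 112x112 crop.

```python
import numpy as np

# Hypothetical 5-point template (eye, eye, nose tip, mouth corner, mouth corner)
# for a 112x112 crop; real systems use their own tuned values.
TEMPLATE_5PT = np.array([[38.0, 52.0], [74.0, 52.0], [56.0, 72.0],
                         [42.0, 92.0], [70.0, 92.0]])

def estimate_similarity(src, dst):
    """Least-squares (scale, R, t) with dst ~ scale * R @ src + t (Umeyama's method)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_s, dst - mu_d
    cov = dst_c.T @ src_c / len(src)               # 2x2 cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:   # forbid reflections
        D[1, 1] = -1.0
    R = U @ D @ Vt
    scale = np.trace(np.diag(S) @ D) / src_c.var(axis=0).sum()
    t = mu_d - scale * R @ mu_s
    return scale, R, t

def apply_similarity(pts, scale, R, t):
    """Map (N, 2) points through the similarity transform."""
    return scale * np.asarray(pts, dtype=float) @ R.T + t
```

With `scale, R, t = estimate_similarity(landmarks5, TEMPLATE_5PT)`, the 2x3 matrix `np.hstack([scale * R, t[:, None]])` has the form accepted by OpenCV's `cv2.warpAffine`. A similarity transform (rather than a full affine or per-point warp) is used so that a single misplaced point can only shift, rotate, or rescale the face, not distort it, which matches the article's point about limiting the effect of registration errors.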

Figure 7 Face Recognition

Beautification requires ultra-high-precision positioning of facial features, for example of the eyelashes in eye makeup; only accurate positioning produces a natural beautification effect. To improve accuracy, we use a cascaded model that first roughly locates the facial features and then refines each of them at a finer granularity.
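The coarse-to-fine idea can be sketched as: estimate all points on the whole face, then crop a patch around each facial organ and let a dedicated local model re-estimate that organ's points. The article names the two stages but not the models, so `coarse` and the per-organ `refiners` below are hypothetical callables, and the patch half-size `half` is an arbitrary choice.

```python
import numpy as np

def crop(image, center, half):
    """Square patch around center, clamped to the image; returns patch and its top-left (x, y)."""
    h, w = image.shape[:2]
    cx, cy = int(round(center[0])), int(round(center[1]))
    x0, y0 = max(cx - half, 0), max(cy - half, 0)
    x1, y1 = min(cx + half, w), min(cy + half, h)
    return image[y0:y1, x0:x1], np.array([x0, y0], dtype=float)

def coarse_to_fine(image, coarse, groups, refiners, half=16):
    """Two-stage registration.

    coarse(image) -> (N, 2) initial points in image coordinates (hypothetical model).
    groups maps an organ name to the indices of its points.
    refiners[name](patch) -> (len(indices), 2) points in patch coordinates (hypothetical model).
    """
    pts = np.asarray(coarse(image), dtype=float).copy()
    for name, idx in groups.items():
        center = pts[idx].mean(axis=0)             # organ center from the coarse stage
        patch, origin = crop(image, center, half)  # zoom in on this organ only
        pts[idx] = origin + refiners[name](patch)  # back to image coordinates
    return pts
```

Refining each organ in its own small patch lets the second stage spend its capacity on fine detail such as eyelid and lip contours, which is what makeup effects need.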

Figure 8 Smart beauty makeup

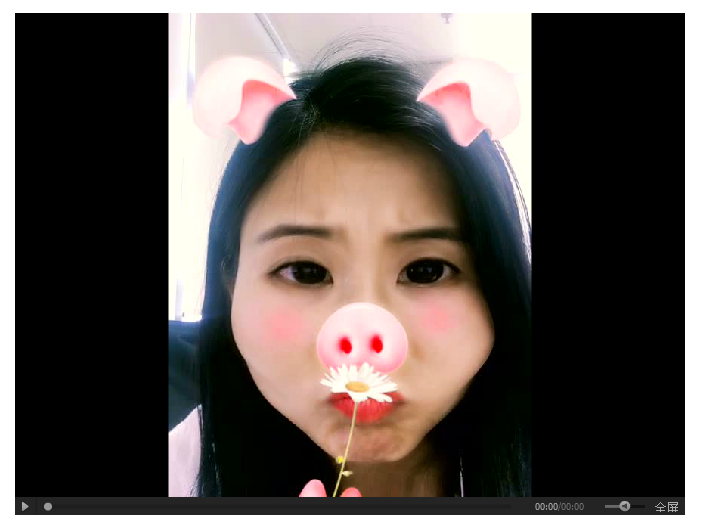

Selfie special effects process mobile video, which places strict demands on registration speed. Traditional face registration cannot judge whether tracking has succeeded, so to avoid losing the face during tracking (drifting onto non-face regions) it must fall back on time-consuming face detection. Our face registration adds a face judgment step, checking whether the tracked region is still a face, to reduce this reliance on face detection. In addition, we adopted a shallow, wide ("squat") deep neural network and applied SVD decomposition for model compression and algorithm acceleration. The model is kept within 1 MB, and processing takes only 3 ms on mainstream mobile phones; both model size and speed are at the top of the industry.
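SVD compression of a layer works by replacing a weight matrix W (out x in) with two thin factors A (out x k) and B (k x in) whose product is the best rank-k approximation of W, so y = Wx becomes y ≈ A(Bx). The sketch below shows the idea on a single fully connected layer; the article does not say which layers were factorized or what rank was used, so `k` here is a free, illustrative choice.

```python
import numpy as np

def svd_compress(W, k):
    """Factor W (out x in) into A (out x k) and B (k x in); A @ B is the best rank-k approximation."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :k] * s[:k]   # fold singular values into the first factor
    B = Vt[:k, :]
    return A, B

def param_counts(W, k):
    """Parameters before (one m x n layer) and after (two thin layers) factorization."""
    m, n = W.shape
    return m * n, k * (m + n)
```

In a network, the original layer is replaced by two consecutive linear layers (first B, then A, with no nonlinearity in between). For a 256 x 512 layer at k = 32, the parameter count drops from 131,072 to 24,576, and the multiply count drops by the same factor; the cost is the approximation error from the discarded singular values, which is usually recovered by fine-tuning.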

Video 1 face selfie special effects

Evolution of Youtu face registration

Youtu Lab keeps following technology trends and updating its work, and Youtu face registration has migrated from traditional methods to deep learning methods. Moving from the latest academic results to the best engineering choices, we have made numerous innovations and attempts over several rounds of iterative updates. In April 2013, version 1.0 of face registration was released, providing rough localization of facial features. Four months later, version 2.0 with precise localization was released and used in entertainment products. Version 3.0 then greatly improved accuracy and was deployed in beautification products. Version 4.0 began to use deep learning, improving accuracy further, with average precision exceeding human level. In May of this year, we released the latest version, 5.0, which uses deep multi-task learning and is greatly optimized in both speed and network model size: it runs at over 200 frames per second on mainstream mobile phones, the model is 1 MB, and it has a built-in face judgment function. The selfie special effects mentioned earlier are supported by this version.

4. Follow-up R&D plan

Going forward, we will focus on the one hand on improving the user experience of applications that are already deployed, and on the other hand actively explore new application scenarios. At present, face tracking in selfie video is still not good enough; solving this and improving the user experience depends on further research into the stability and accuracy of face registration. Beyond the applications mentioned in this article, Youtu's face registration technology can also be applied in many fields, such as smart access control systems, identity verification for Internet finance, and the live streaming industry. In these new fields, researching how face registration can meet new requirements is another issue we will certainly face.

Lei Feng Network note: This article is published by Lei Feng Network. To reprint it, please contact the original author, credit the source and author, and do not delete any content.