What support is needed for the 8K rush?

As display technologies evolve, so does resolution. From the rise of 1080p in the LCD era to the 4K boom in OLED devices, resolution has steadily improved. Higher resolution should in theory produce sharper images, but on smaller screens the difference between 2K and 4K can be nearly imperceptible to the average user.

High resolution on small screens brings new challenges

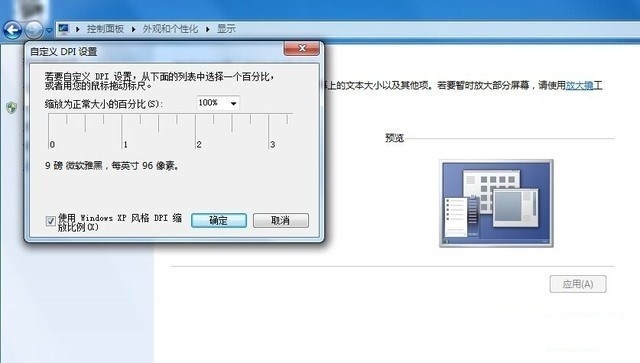

Therefore, upgrading resolution must go hand in hand with increasing screen size. Otherwise, high resolution can actually hurt the viewing experience. For example, a 32-inch 4K display running without scaling may render text and icons too small to be practical, which isn't ideal on either Windows or macOS.
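To put a number on the screen-size argument, pixel density can be estimated from the pixel dimensions and the diagonal size. The snippet below is only a rough sketch: the function name `pixels_per_inch` and the example panels are illustrative assumptions, not figures from this article.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Estimate pixel density (PPI) from pixel dimensions and diagonal size in inches."""
    # The diagonal length in pixels follows from the Pythagorean theorem.
    return math.hypot(width_px, height_px) / diagonal_in


# Example panels; the sizes are assumptions chosen for illustration.
panels = [
    ("32-inch 4K", 3840, 2160, 32.0),
    ("32-inch 1440p", 2560, 1440, 32.0),
    ("65-inch 8K", 7680, 4320, 65.0),
]

for label, width, height, diagonal in panels:
    print(f"{label}: {pixels_per_inch(width, height, diagonal):.0f} PPI")
```

Under these assumptions a 32-inch 4K panel works out to roughly 138 PPI, about 1.5 times the density of a 1440p panel of the same size, which is why interface elements shrink unless the operating system scales them up.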

High resolution demands strong hardware support

High-resolution content requires more storage, faster transmission, and more processing power. Even today, many PCs struggle to drive 4K displays, which call for top-tier graphics cards and plenty of memory. For 8K, two high-end graphics cards could be necessary, which is a costly investment.

Storing just a few seconds of 8K content can take up gigabytes, and while terabyte-class hard drives are affordable, they are still not enough for a large 8K library. Considering all this, 8K products may not be suitable for regular consumers. But what about commercial applications? Could ultra-high resolution have a future there?
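The storage claim is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below assumes uncompressed video at 8 bits per channel RGB (3 bytes per pixel) and 60 frames per second; these parameters and the function name `raw_video_bytes` are assumptions for illustration. Real-world 8K content is normally compressed and far smaller.

```python
def raw_video_bytes(width_px: int, height_px: int, fps: int,
                    seconds: float, bytes_per_pixel: int = 3) -> float:
    """Size in bytes of uncompressed video with the given dimensions and duration."""
    bytes_per_frame = width_px * height_px * bytes_per_pixel
    return bytes_per_frame * fps * seconds


# Compare 4K and 8K for a 5-second clip (frame rate and duration are assumed).
for label, width, height in [("4K", 3840, 2160), ("8K", 7680, 4320)]:
    size_gb = raw_video_bytes(width, height, fps=60, seconds=5) / 1e9
    print(f"{label}: {size_gb:.1f} GB for 5 s of uncompressed video")
```

Because 8K has four times as many pixels as 4K, every figure quadruples: under these assumptions one second of raw 8K video is close to 6 GB.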

Changing perspectives, looking ahead

In the commercial sector, high-resolution screens are valuable. Fields like medicine, retail, and digital signage benefit from the ability to show more detail. And unlike personal computers, commercial systems don't need to be as powerful, especially when they rely on streaming media, where a fast network can offset limited local storage.

High-resolution in automotive applications

Today, many smart TVs support 4K playback, and the Android-based systems they run on are relatively inexpensive. Upgrading such systems can deliver high-quality visuals without excessive cost. With 5G, streaming becomes more feasible, easing the storage and bandwidth constraints.

Ultra-high resolution may not suit individual users

At the same time, only the commercial sector can afford high-priced display devices. Ordinary consumers may not see the value in 8K unless there is real content to support it. Features like HDR, on the other hand, offer more tangible benefits for everyday users.

Therefore, blindly pushing resolution upgrades without considering user needs is unwise. It is similar to how Nokia focused on phone quality and battery life but missed the shift to smartphones. People care more about whether a product meets their needs than about its specs alone.
